The EU Artificial Intelligence Act: A New Era of Regulation

The European Union (EU) is on the verge of making history with its proposed Artificial Intelligence Act (AI Act). As of August 2023, it stands to become the world's first comprehensive legal framework for artificial intelligence. As final negotiations progress, the Act is expected to have significant implications for how AI is used and to set a new standard for AI regulation worldwide.

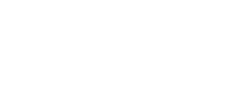

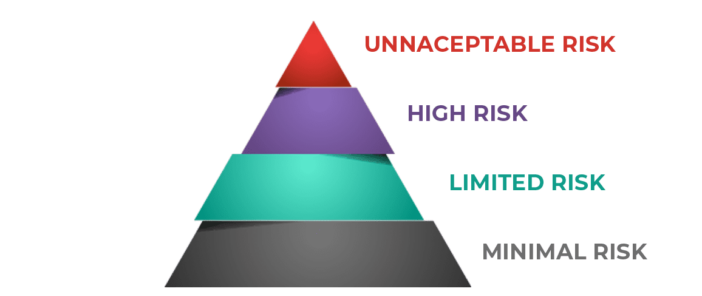

The EU AI Act proposes a multi-tiered classification system for AI applications, categorizing them based on their potential risk levels. The highest level, deemed an unacceptable risk, includes AI systems that pose potential threats to societal safety or infringe upon fundamental rights. These systems are set to be prohibited and include those that manipulate human behaviour, utilize real-time facial recognition, or engage in social scoring[1].

AI systems that pose a high risk, while not explicitly banned, will be subject to stringent regulation. These systems will be required to be registered in an EU database and undergo rigorous assessment procedures before deployment. This category encompasses AI systems that could negatively impact safety or fundamental rights[1].

AI systems presenting limited risk must adhere to minimal transparency guidelines. This provision's objective is to enable users to make informed decisions regarding their AI usage, promoting transparency and trust in AI technologies[1].
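As a rough illustration of how the tiers described above map to obligations, the following Python sketch encodes only the three tiers named in this article. The names `RiskTier`, `OBLIGATIONS`, and `may_deploy` are hypothetical, and the deployment check is a simplification of the draft text, assumed here for illustration only.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk tiers as summarized in this article (hypothetical names)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"


# Obligations per tier, paraphrased from the paragraphs above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: [
        "registration in an EU database",
        "assessment before deployment",
    ],
    RiskTier.LIMITED: ["minimal transparency guidelines"],
}


def may_deploy(tier: RiskTier, registered: bool = False, assessed: bool = False) -> bool:
    """Simplified check: may a system in this tier be deployed?"""
    if tier is RiskTier.UNACCEPTABLE:
        # Unacceptable-risk systems are set to be prohibited outright.
        return False
    if tier is RiskTier.HIGH:
        # High-risk systems need both registration and a prior assessment.
        return registered and assessed
    # Limited-risk systems may be deployed, subject to transparency duties.
    return True
```

For example, `may_deploy(RiskTier.HIGH, registered=True)` returns `False` under these assumptions, because the assessment step has not been completed.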

A RISK-BASED APPROACH

However, finalizing this proposed Act is not without its challenges. Contentious issues such as the use of AI for biometric surveillance, the definition of high-risk AI, and governance concerns have surfaced. Furthermore, businesses face hurdles in understanding and implementing the proposed stipulations, which introduce new requirements for data disclosure, energy reporting, risk mitigation, and evaluation standards[3].

In addition, the current draft does not adequately address model use, supply chain factors, or model accessibility for researchers. Tech companies are seeking further clarification on transparency requirements, model access for evaluation, and standards for impact assessments[3].

AI ACT: DIFFERENT RULES FOR DIFFERENT RISKS

Despite these challenges, the proposed EU AI Act reflects the EU's commitment to leading the global discourse on AI ethics and regulation. Once finalized, the Act could serve as a model for other nations grappling with the rapid spread of AI technologies.

Alignment between the AI Act and sectoral legislation is critical to facilitate continued access to innovative healthcare

AI has great potential to improve patient outcomes and healthcare systems.

Pharma companies could use algorithms at all stages of the drug-development process: to identify which molecules could best target a specific disease; to select patients for clinical trials based on how they're expected to respond to a drug; and to extract trial data and complete forms for regulators. AI's predictive powers could even eliminate the need to test drugs on animals.

Some recommended approaches to using AI/ML technologies set out in the Reflection Paper include [4]:

- Carry out a regulatory impact and risk analysis (the higher the risk, the sooner engagement with regulators is recommended)

- Employ traceable data acquisition methods that avoid introducing bias

- Maintain independence of training, validation and test data sets

- Implement generalizable and robust model development practices, following a risk-based approach

- Undertake performance assessments using appropriate metrics

- Follow ethical principles defined in the guidelines for trustworthy AI and presented in the Assessment List for Trustworthy Artificial Intelligence (ALTAI)

- Implement governance, data protection and data integrity measures
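One of the recommendations above, keeping training, validation and test data sets independent, can be sketched in code. The example below is a minimal illustration, not a method taken from the Reflection Paper: it assumes each record carries a hypothetical `patient_id` field and assigns every patient to exactly one split by hashing that identifier, so records from the same patient never appear in more than one set. The function names and the 70/15/15 proportions are assumptions chosen for the example.

```python
import hashlib


def assign_split(group_id: str, val_frac: float = 0.15, test_frac: float = 0.15) -> str:
    """Deterministically map a group (e.g. a patient) to one split."""
    digest = hashlib.sha256(group_id.encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + val_frac:
        return "validation"
    return "train"


def split_records(records, key="patient_id"):
    """Group-level split: all records sharing `key` land in the same set."""
    splits = {"train": [], "validation": [], "test": []}
    for record in records:
        splits[assign_split(str(record[key]))].append(record)
    return splits


# Usage: several measurements per (hypothetical) patient.
records = [
    {"patient_id": f"P{i % 50:03d}", "measurement": i} for i in range(200)
]
splits = split_records(records)
```

Splitting at the level of the patient rather than the individual record is a design choice: a purely random row-level split could place measurements from one patient in both the training and test sets, which would undermine the independence the recommendation asks for.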

The proposed EU AI Act represents a major step forward in AI regulation and a shift towards more ethical and controlled use of AI technology. As the EU edges closer to finalizing the Act, the world awaits its impact on AI's future. Balancing the benefits of AI advancement with ethical considerations is a complex task, but the EU's efforts may well lay the groundwork for a more responsible and transparent AI landscape.

References:

[1] Regulatory framework proposal on artificial intelligence

[2] The Four Risks of the EU's AI Act

[3] EU AI Act: Risk Categories - Dublin

[4] Europe’s race to get to grips with AI drugs